Backup Revisited (Again)

Maybe you share one of my great fears: Losing one or more important files. There's no shortage of threats, from disk failure to malware to user stupidity. Yes, I've accidentally deleted or overwritten files that I wanted to keep, but I haven't lost a critical file in 14 years. Believe me when I say that I remember events like that because they're painful.

I remember losing a document when desktop computers had two 5-inch floppy disks and no hard drive, so that would have been prior to the mid-1980s. It happened when I was attempting to make a backup copy of a file I was working on and the data on the floppy disk was scrambled. The other time I lost files was when my computer was attacked by a virus on Monday, May 8, 2000.

Backup isn't the kind of topic that can be made fun or exciting. It's one of the dullest topics I can imagine, but it's also one of the most important subjects to come to terms with. Hard disk drives are a lot more reliable than they used to be and it's not uncommon to find drives that are still running reliably after 5 years or more, but they do fail. One of the drives on my desktop system failed recently; it was less than 2 years old and well within its 5-year warranty. Because I had a backup strategy, the event was nothing more than a minor annoyance.

There are files on my computer that, if lost, would cause only minor distress. Downloaded program files, for example. These can always be downloaded again. Losing certain other files would be significantly more distressing: Installation keys for downloaded program files can usually be retrieved from the publisher, but it can take a lot of time. And then there are files that, if lost, would be catastrophic at some level: Financial and tax records, photographs, website development files, and such.

It's that third category that causes me to review my backup procedures once or twice every year to ensure that I'm doing everything possible to avoid catastrophes.

For one thing, I don't depend on any one backup system because that would create a single point of failure. That may be overstating the case a bit because a file could be lost only if it's damaged or destroyed in two locations: On the primary computer and on the backup medium. But I still prefer to have multiple solutions.

The multi-part system I've used for several years consists of several components:

- All critical files (but not the operating system and installed programs) are backed up to Carbonite, an online backup service.

- All critical files (including the operating system and installed programs) are backed up to disk drives that are stored off-site except for the one day per week when they're used to back up new files.

- All working files are backed up as they are changed to local USB drives.

The third backup option (local USB drives) can't really be considered a true backup because any backup that's stored in the same structure as the device that's being backed up is subject to actions that can damage or destroy the computer. But there's a good reason for doing this: If the primary computer suffers a hard drive, CPU, or main-board failure, the local backup drives can be attached to a notebook computer and I can continue working with virtually no downtime.

The local backups are also handy if I accidentally delete or overwrite a file. Copying the previous version of the file from the external drive back to the computer takes just seconds.

In the event of a more significant system failure, I can restore from the drives that are stored 15 miles away or from Carbonite's server in Boston. To lose a critical file, it would be necessary for something to damage or destroy the desktop computer, the local backup drives, drives that are stored 15 miles away, and a disk drive in Boston. While something like this could happen, it's my belief that -- if it does -- I'll have a lot more to be concerned about than some missing data files.

Recent Changes

For the hard drives that are stored off-site, Macrium Reflect has been my preferred backup application for several years because it's possible to create a full backup for each logical drive on the system and then run incremental backups to add just new and changed files each week. The problem with incremental backups, though, is that a full restore requires the full backup and each of the incremental backups. If something goes wrong with one of the incremental backups, recovery may not be possible.
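To make the weakness of an incremental chain concrete, here is a minimal Python sketch. It is not how Macrium Reflect stores or restores data; the dictionary-based "backups", the `restore` function, and the use of `None` to mark a deleted file or an unreadable backup are all assumptions made purely for illustration.

```python
from typing import Dict, List, Optional

# A backup is modeled as a mapping of file path -> contents.
# A value of None marks a file deleted since the previous backup.
Backup = Dict[str, Optional[str]]


def restore(full: Backup, incrementals: List[Optional[Backup]]) -> Dict[str, str]:
    """Rebuild the latest state from a full backup plus every incremental, in order.

    An unreadable incremental is represented by None (a hypothetical convention).
    """
    state = {path: data for path, data in full.items() if data is not None}
    for week, inc in enumerate(incrementals, start=1):
        if inc is None:
            # Later incrementals only hold changes relative to this one,
            # so the chain cannot be trusted past a missing link.
            raise ValueError(f"incremental {week} is unreadable; chain is broken")
        for path, data in inc.items():
            if data is None:
                state.pop(path, None)  # file was deleted that week
            else:
                state[path] = data     # file was added or changed
    return state


full = {"taxes.xls": "v1", "photo.jpg": "v1"}
week1 = {"taxes.xls": "v2"}
week2 = {"notes.txt": "v1", "photo.jpg": None}

latest = restore(full, [week1, week2])
```

With every link intact, `latest` holds the week 2 state. Passing `[None, week2]` instead raises an error, which is the situation described above: one damaged incremental puts everything after it in doubt.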

The boot drive should be imaged so that all of the boot information, the Registry, and other system files will be backed up and Macrium Reflect is ideal for that. For the other drives, though, it would be handy to have the exact directory and file structure as on the drive that's being backed up and to be able to restore a file by just copying it from the backup drive.

That's exactly what I do with the local USB backups using GoodSync. GoodSync is smart enough to copy only new and changed files to the backup drives. So why couldn't I continue to use Macrium Reflect to back up the boot drive and switch to GoodSync to back up the data drives?
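The idea of copying only new and changed files while keeping the same directory structure can be sketched in a few lines of Python. This is only an illustration of the general technique, not GoodSync's actual logic; the function names and the rule "copy when the target is missing, differs in size, or is older" are assumptions.

```python
import shutil
from pathlib import Path
from typing import List


def needs_copy(src: Path, dst: Path) -> bool:
    """True when dst is missing, differs in size, or is older than src."""
    if not dst.exists():
        return True
    s, d = src.stat(), dst.stat()
    return s.st_size != d.st_size or s.st_mtime > d.st_mtime


def backup_tree(source: Path, target: Path) -> List[Path]:
    """Copy new and changed files from source to target, keeping the folder layout.

    Returns the relative paths of the files that were copied.
    """
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        dst = target / src.relative_to(source)
        if needs_copy(src, dst):
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves the modification time
            copied.append(src.relative_to(source))
    return copied
```

Calling something like `backup_tree(Path("D:/"), Path("X:/Backup/D"))` (both paths are hypothetical) would copy everything on the first run and only the changes after that. Because the target mirrors the source layout, restoring a file is just a matter of copying it back.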

I have described both Carbonite and Macrium Reflect on previous programs. This time we'll consider GoodSync.

A free version of the program allows for up to 3 jobs and a limited number of files to be transferred, but the pro version ($30) allows users to create any number of jobs, each with any number of files.

The jobs are listed as tabs near the top of the screen. The user simply selects a tab and then clicks an Analyze button.

At the completion of the analysis process, the user can then edit the job and proceed to commit the process. The green arrows show how GoodSync will handle the files, copying from left to right, for example. GoodSync can perform a synchronization or a backup. Backups move files in only one direction -- from the source to the backup media. The synchronization process can move files in either direction to ensure that the most recent copy of the file is stored on two (or more) computers.
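The difference between a backup and a synchronization comes down to which directions a file is allowed to travel. The Python sketch below shows one simple way to make that decision from modification times. It is a simplification and an assumption on my part, not GoodSync's real algorithm: it ignores deleted files and conflicts, and the `sync_action` name and its return values are invented for the example.

```python
from typing import Optional


def sync_action(left_mtime: Optional[float],
                right_mtime: Optional[float],
                one_way: bool = False) -> str:
    """Decide what to do with one file that may exist on either side.

    Times are modification times in seconds; None means the file is absent.
    Returns "left_to_right", "right_to_left", "equal", or "skip".
    """
    if left_mtime is None and right_mtime is None:
        return "equal"
    left_newer = right_mtime is None or (
        left_mtime is not None and left_mtime > right_mtime)
    right_newer = left_mtime is None or (
        right_mtime is not None and right_mtime > left_mtime)
    if left_newer:
        return "left_to_right"
    if right_newer:
        # A one-way backup never writes to the source side.
        return "skip" if one_way else "right_to_left"
    return "equal"
```

In this sketch, `sync_action(200.0, 100.0)` gives `"left_to_right"`, while a newer file on the right gives `"right_to_left"` for a synchronization and `"skip"` when `one_way=True`.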

When the files have been copied, GoodSync shows that all files are synchronized (with an equals sign). If there are problems, an error icon will be displayed and the problem will be explained.

What's particularly welcome about folder and directory backups using GoodSync is that the process takes so little time. Macrium Reflect is an excellent choice for backing up the system disk with a full backup taking about 90 minutes and incremental backups running no more than 15 minutes. Folder and file backups seemed to be a problem with Macrium Reflect, though, with the enumeration process consuming several hours to determine which files needed to be backed up; in most cases, the subsequent backup took no more than a few minutes because most of the files on most of the drives hadn't changed.

GoodSync typically performs its analysis and enumeration in 3 to 5 minutes, so I was able to run an incremental backup on 4 drives this week in less than 20 minutes. The total elapsed time for Macrium Reflect's incremental backup of drive C and GoodSync's incremental backups of drives D, E, F, and G was less than half an hour.

And my essential files are on the computer's hard drive, backed up locally, backed up to off-site drives, and backed up to Carbonite.

A New Router Makes Quick Work of Network Attached Storage

Many new routers make it easy to set up network attached storage (NAS). When a Linksys router failed without warning, I knew that I didn't want another Linksys router, so I ended up buying a NetGear router that runs in both the 2.4GHz range and the 5.0GHz range. It also offered USB connections that could be used by an external disk drive, so I plugged one in. The drive appeared in the Windows Explorer as a network drive, which meant that I could set it up with a drive letter from all the computers on the network.

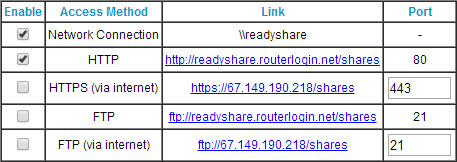

The router does all of the heavy lifting, creating "\\READYSHARE\USB_Storage\" so any user on the network can connect to it using the network share name. You can also make the drive available via HTTP (locally), HTTPS (via the Internet), or FTP (either locally or via the Internet) and you can control read and write access separately with passwords.

By default, only the local network connection and the local HTTP connection are permitted and those are all that most people will need.

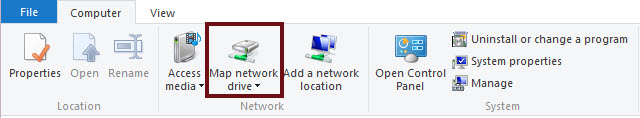

Mapping the NAS device's network share name to a local drive is easy enough. Just select Map Network Drive from the Windows Explorer.

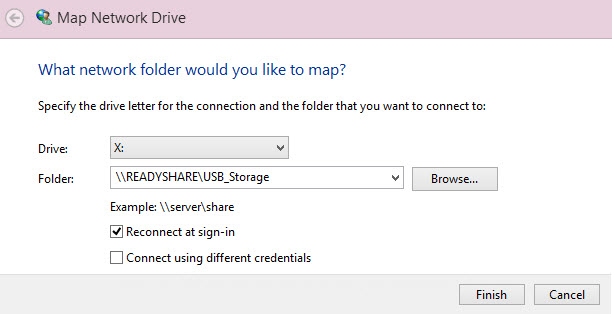

Then browse to the network share name and specify a drive letter. In this case, the NAS drive is available as drive X. It could be mapped as a different drive letter from another computer.
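The same mapping can be done from the command line with Windows' `net use` command, which is handy if you need to set up several computers. Here is a small Python sketch that builds and runs that command. The helper names are my own, and the drive letter X and the share name are simply the ones used in this example; `map_drive` will work only on Windows with the share reachable.

```python
import string
import subprocess
from typing import List


def map_drive_command(letter: str, share: str, persistent: bool = True) -> List[str]:
    """Build the `net use` arguments that map a network share to a drive letter."""
    letter = letter.rstrip(":").upper()
    if len(letter) != 1 or letter not in string.ascii_uppercase:
        raise ValueError("drive letter must be a single letter such as X")
    flag = "/persistent:yes" if persistent else "/persistent:no"
    # net use expects the share without a trailing backslash
    return ["net", "use", f"{letter}:", share.rstrip("\\"), flag]


def map_drive(letter: str, share: str) -> None:
    """Run the command; raises CalledProcessError if Windows reports a failure."""
    subprocess.run(map_drive_command(letter, share), check=True)


command = map_drive_command("x", r"\\READYSHARE\USB_Storage")
```

The `/persistent:yes` option asks Windows to restore the mapping at the next logon, which matches what most people expect from a mapped drive.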

Now What?

Now that you have a NAS drive, what good is it? There are several good uses for such a drive.

- Because the NAS drive obtains power from the router and the router is presumably on at all times, the drive is always available to whatever computer is attached to the network. You don't have to depend on any particular computer being turned on. This makes the drive an ideal place for files that need to be shared among various users.

- The NAS drive can be used as a backup drive, keeping in mind the caution that any backup that's in the same location as the device being backed up isn't really a backup.

- A NAS device can usually be set up to allow media streaming for devices on your network that can accept streaming media (a smart television, for example).

Network attached storage may seem like a solution without a problem, but once you've been able to use a NAS drive for a few days, you'll probably realize just how useful the setup is. In many ways, this is similar to having a network printer. Those who once made do by sending files to someone else to have them printed, or by making sure that the computer the printer is attached to was on before trying to print a file, find that having a printer that attaches to the network makes doing work a lot easier.

Perfect Storm: IE Flaw on Windows XP

You've probably heard about the latest security flaw that affects Internet Explorer. As bad as the flaw is, it's even worse if you're still running Windows XP. Microsoft recommends some workarounds that involve disabling certain system functions. The US Department of Homeland Security (via CERT, the Computer Emergency Response Team) says that XP users who cannot follow Microsoft's recommendations should use another browser.

That is, in fact, the easiest solution. Just switch to Firefox, Chrome, Opera, or Maxthon. That's not to say that the other browsers don't have security flaws, but these browsers aren't as tightly integrated with the operating system as IE is.

XP multiplies the threat because support ended for the 13-year-old operating system on April 8.

For those who must use Internet Explorer, Microsoft recommends unregistering VGX.DLL. This is a dynamic-link library (DLL) that is a module associated with Microsoft Vector Graphics Rendering (VML). It is not a critical component, but unregistering it will change the way your computer works.

Microsoft provides the following guidance to perform the task. For both 32- and 64-bit systems, you must run the process from an "elevated" command prompt. This means that you need to run the command processor "As Administrator". Find CMD.EXE in the Start Menu, right-click it, and select "Run as administrator."

For 32-bit Windows systems

- From an elevated command prompt enter the following command:

"%SystemRoot%\System32\regsvr32.exe" -u "%CommonProgramFiles%\Microsoft Shared\VGX\vgx.dll"

- A dialog box should appear after the command is run to confirm that the un-registration process has succeeded. Click OK to close the dialog box.

- Close and reopen Internet Explorer for the changes to take effect.

For 64-bit Windows systems

- From an elevated command prompt enter the following commands:

"%SystemRoot%\System32\regsvr32.exe" -u "%CommonProgramFiles%\Microsoft Shared\VGX\vgx.dll"

"%SystemRoot%\System32\regsvr32.exe" -u "%CommonProgramFiles(x86)%\Microsoft Shared\VGX\vgx.dll"

- A dialog box should appear after each command is run to confirm that the un-registration process has succeeded. Click OK to close the dialog box.

- Close and reopen Internet Explorer for the changes to take effect.

You'll find additional guidance on the CERT website: http://www.kb.cert.org/vuls/id/222929.

Internet Explorer Update

Microsoft has released an update to address the vulnerability that affects all versions from 6 through 11 of Internet Explorer. And, for bonus points, the company is providing the update for users of Windows XP, too.

The flaw allows attackers to take complete control of a computer, so it's important that users ensure that the emergency update has been installed. If you have enabled automatic updates, you should already have the security update.

Short Circuits

Wheeler Says the FCC is Not Killing Net Neutrality

Facing extreme criticism that the Federal Communications Commission, in the guise of protecting net neutrality, is actually killing the concept, FCC Chairman Tom Wheeler says this is not what the agency plans to do. This week, Wheeler said that the FCC will write "tough" new rules to prohibit broadband providers from denying access to smaller operations and startups.

Speaking to broadband executives this week, Wheeler said that a lack of competition in their industry has hurt consumers. This, of course, is at a time when Comcast and Time Warner are planning to merge. Because the two don't operate in any market where they compete today, the merger wouldn't do anything to harm competition, but it won't do anything to advance competition, either.

The combined operation would control nearly half of the broadband market in the United States, so Wheeler says that the FCC is concerned about the implications of the merger.

Some state legislatures have created laws that prohibit municipalities from providing low-cost broadband service to residents. Wheeler says that the FCC can and will override those laws. The broadband providers have spent a lot of money lobbying state legislators to pass those laws.

Addressing the annual meeting of the National Cable and Telecommunications Association, Wheeler seemed intent on convincing those (including me) who have been critical of the direction the FCC has been taking on net neutrality that he's serious about maintaining an open Internet. In 2 weeks the FCC will release the first draft of Wheeler's proposed rules for public comment. This is actually the third time the FCC has attempted to deal with the net neutrality issue.

The proposal reportedly would allow Internet service providers to create a fast lane for companies that are willing to pay more, but Wheeler is also talking about policies that would provide reasonable access for everyone. "If someone acts to divide the Internet between haves and have-nots," Wheeler said, "we will use every power at our disposal to stop it."

McAfee: A Problem with the Product, but Great Customer Support

McAfee offers a service that supports every computer, tablet, or mobile device you own for $100 per year. When they offered a discount ($50), I bought it. Except for one problem, it was a good buy. Sixty days later, I decided there was no solution to the problem, but the refund period is only 30 days. You may be surprised by what happened next.

First, let me tell you about the problem: My preferred e-mail program is The Bat. Not Outlook. Not Thunderbird. In the United States, The Bat is not a common choice. McAfee's All Access created a problem with The Bat. Clicking any message to view it caused the program to be unresponsive for 30 to 60 seconds.

The only solution involved switching to Outlook or Thunderbird (unacceptable) or turning off antivirus protection when viewing e-mail (also unacceptable). Given that I had placed the order 60 days earlier and the refund period was clearly stated as 30 days, I expected to write off the $50 I'd spent -- but the McAfee site had an option for "live chat".

I wrote: It has been more than 30 days since I purchased the total protection program, but I believe that a refund should be granted because I have been working with support and on my own to resolve a problem. The problem has not been resolved and apparently cannot be resolved. Whenever the protection is active, my e-mail program becomes extremely sluggish. I want to remove the McAfee product and return to my previous application.

- Sundarraj M (10:39:30): Hello, Bill. Please give me a moment while I review the description you have typed in.

- Sundarraj M (10:39:58): As I understand your concern, you wish to cancel the McAfee subscription and need refund for the same. Am I correct?

- Customer (10:40:27): That is correct.

- Sundarraj M (10:40:55): I'm sure it is frustrating, let me do my best to help you. Please give me a minute to check your account details.

- Sundarraj M (10:41:49): Bill, I see that you have valid McAfee product registered under the e-mail address xxxxxxx@xxxxxx.com.

- Customer (10:42:12): Correct.

- Sundarraj M (10:42:38): Please give me a moment while I process your refund.

- Sundarraj M (10:43:35): As requested, we have refunded the McAfee Always on Protection charges of 53.74 (USD) for McAfee® All Access, which will be credited to your account within 5 business days, or before you receive your next credit card statement. Your reference number will be CS1383538238. You will also receive the confirmation e-mail to your e-mail address.

In less than 5 minutes, Sundarraj had understood the problem and acted on it to provide a refund. This is remarkable. McAfee's products certainly work well for a lot of people and many large companies use the enterprise version of their protective applications, so clearly the performance problem I experienced is unusual.

My point in writing about this is that McAfee's products are well worth considering, particularly in light of the company's decision to empower its technical support staff and its customer service staff with the ability to provide exceptional service.

Learning How to Take Better Pictures

I received a question from a listener who has several trips scheduled this year. He and his wife were looking for someone in central Ohio who could provide some hands-on training. They have Canon Powershot SX40 and SX50 cameras. He would like to stop using the camera's Auto-ISO function and she's looking for tips on exposure modifications. Although I know nobody in central Ohio who provides individual training, I was able to provide some suggestions.

I can strongly recommend a series of "foundations" programs on Lynda.com by Ben Long. While this isn't a hands-on approach, Ben excels as a photographer, as a teacher, and as an on-camera presenter. He has programs on lenses, exposure, and travel photography (among others).

You could watch the entire series over the course of a month or two, so the entire cost would be $25 to $50.

I have seen online deals for hands-on training, but I don't have any information about the instructors or the quality of instruction.

Using either aperture or shutter priority in situations where fast shooting is required (travel situations, for example) can sometimes be counterproductive. In most cases, I leave cameras set to program mode because the firmware built in to today's cameras is both reliable and accurate. It usually chooses the best combination of ISO/shutter/aperture. This is particularly true of shutter speeds.

I don't know about the SX series, but the noise level produced by the EOS series is surprisingly low through at least ISO 1600, so giving program mode the flexibility to adjust ISO to provide a faster shutter speed is usually a good compromise.

When I know that a fast shutter speed is essential, I'll switch to shutter priority and when I want either more or less depth of field than program mode will probably give, I'll switch to aperture priority; but I rarely switch away from auto ISO.

If you shoot in raw mode, you can easily use Adobe Lightroom or Adobe Camera Raw to adjust exposure up or down by a stop or two. That's usually enough. If the camera metered a dark area and the highlight areas are blown out, though, there's no fix for that.

A key point to remember, particularly with camera raw, is that every image will need some manipulation to be usable: At the very least sharpening (in contrast to JPGs saved by cameras, raw images have none). If you're not using Lightroom, I encourage you to take a look at it because it provides what I consider to be the best work flow management for amateurs and professionals alike.